The AI Language Journey - From Characters to Agentic Minds

Content

- Overview

- The Dawn of Text Processing: Character by Character

- Building Blocks of Meaning: Bags and Frequencies

- The Rise of Neural Networks: Learning Connections

- The Transformer Revolution: Parallel Processing Power

- Generative Models: From Understanding to Creating

- The Present(not future) is Agentic: AI with Purpose

- The Road Ahead

- References and Credits

Overview

Have you ever wondered how your phone suggests the perfect word, or how a chatbot seems to understand your every query, no matter how convoluted? We’ve come a long way from rudimentary spell checkers to AI systems that can write poetry, summarize dense articles, and even plan your next vacation. This is the incredible journey of Artificial Intelligence in language processing, a journey we’re about to embark on, moving from the simplest character manipulations to the sophisticated world of Agentic AI.

The Dawn of Text Processing: Character by Character

In the early days, AI’s interaction with text was incredibly basic, often focusing on individual characters or simple patterns. Imagine trying to understand a book by only looking at one letter at a time – it’s a monumental task! Early techniques involved:

- Character Processing: At its most fundamental, this involved treating text as a sequence of individual characters. Algorithms might look for specific character combinations or manipulate text based on these units. Think of simple find-and-replace functions or basic string matching.

While foundational, character-level processing alone couldn’t grasp the meaning behind words or sentences.

Building Blocks of Meaning: Bags and Frequencies

To move beyond individual characters, AI needed to start understanding words. This led to the development of techniques that focused on the presence and importance of words within a document.

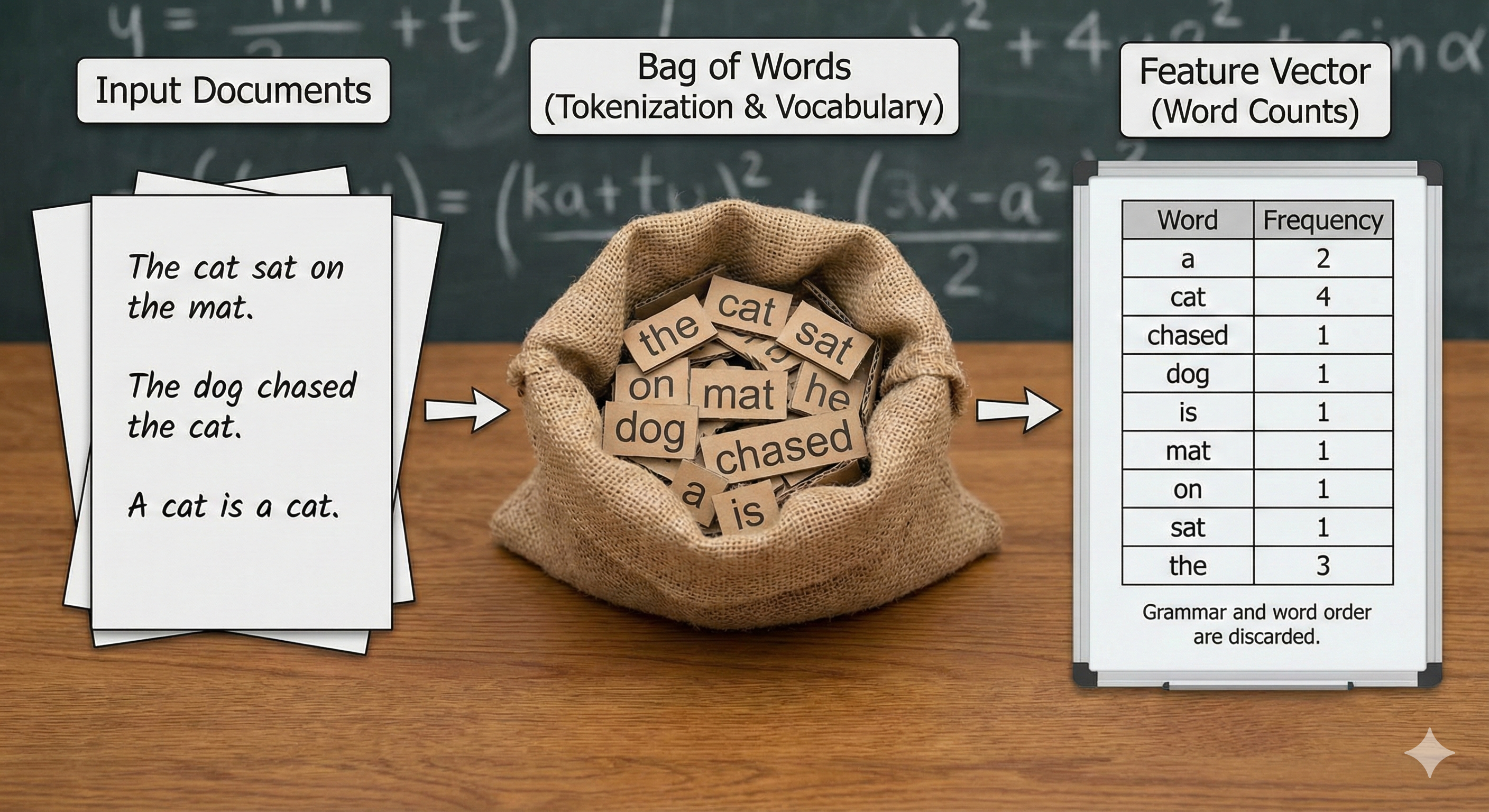

- Bag of Words (BoW): Imagine emptying all the words from a document into a

bagwithout caring about their order. That’s the essence ofBoWThis model represents text as an unordered collection of words, often represented as a vector where each dimension corresponds to a unique word in the vocabulary, and the value indicates its frequency.

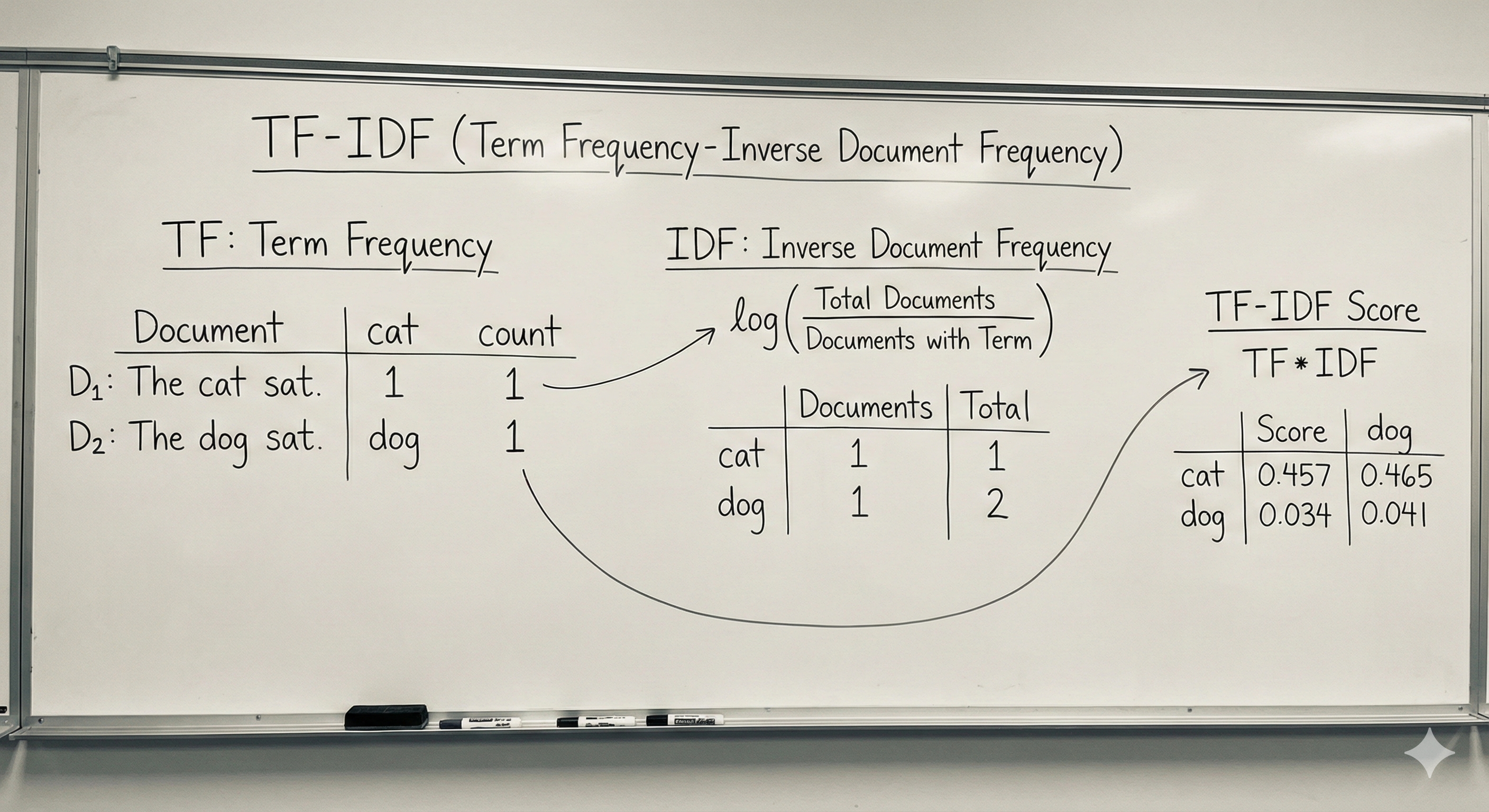

- TF-IDF (Term Frequency-Inverse Document Frequency): While

BoWtells you if a word is present and how often, TF-IDF goes a step further by assessing its importance.- Term Frequency (TF): How often a word appears in a specific document.

- Inverse Document Frequency (IDF): How rare or common a word is across all documents. Words like “the” or “a” appear frequently everywhere and thus have a low IDF, making them less distinctive.

TF-IDF helps identify words that are significant to a particular document, making it useful for information retrieval and text summarization.

These methods were crucial for tasks like spam detection and basic search, but they still struggled with understanding context, nuances, and the order of words.

The Rise of Neural Networks: Learning Connections

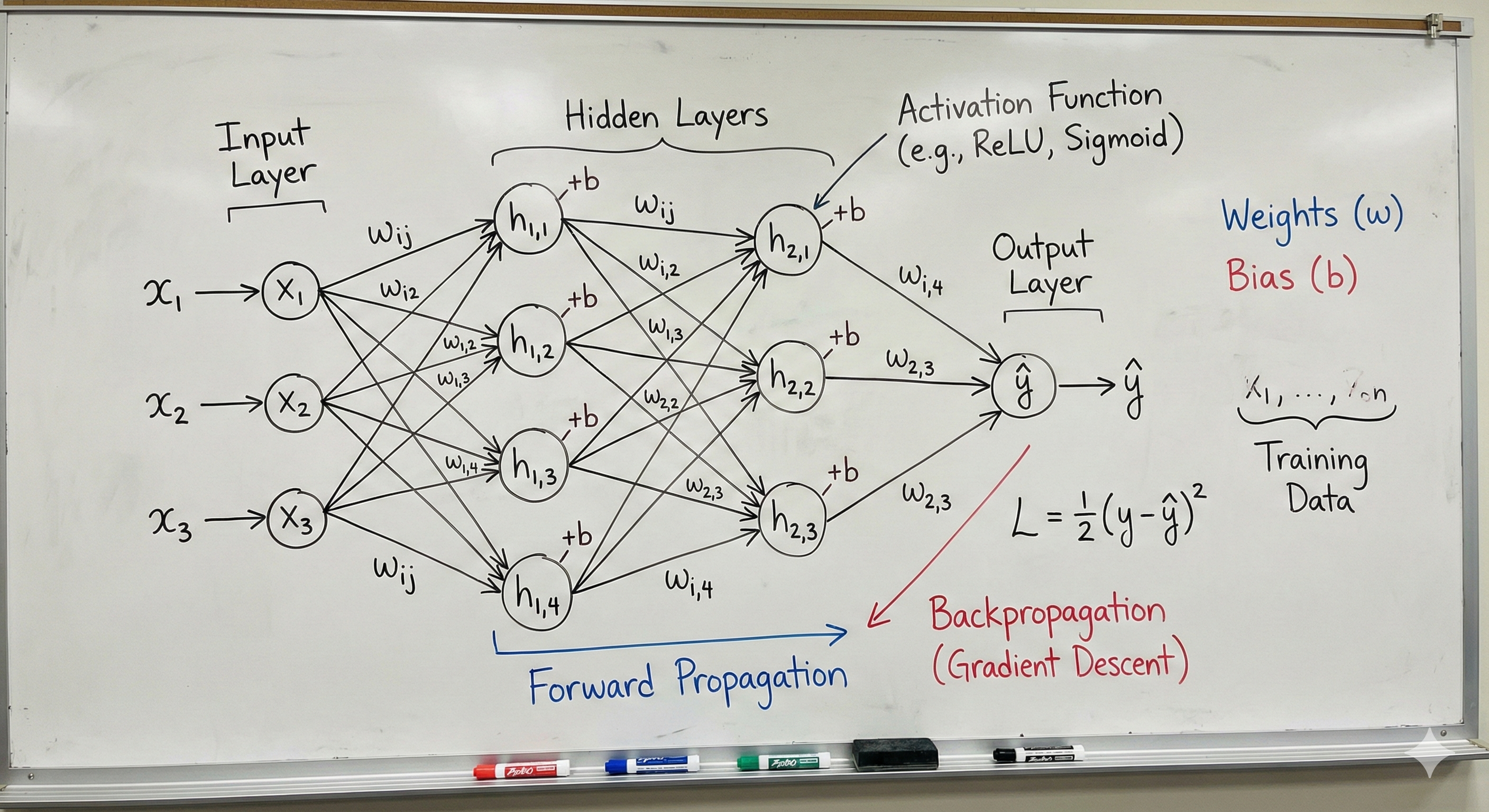

The advent of neural networks marked a paradigm shift. Inspired by the human brain, these networks could learn complex patterns and relationships in data.

Neural Networks (NNs): At their core, NNs consist of interconnected neurons organized in layers. They learn by adjusting the strength of these connections (weights) as they process data, allowing them to map inputs to outputs. For text, this meant learning to associate words and phrases with specific categories or meanings.

Remembering the Past: Recurrent Neural Networks (RNNs)

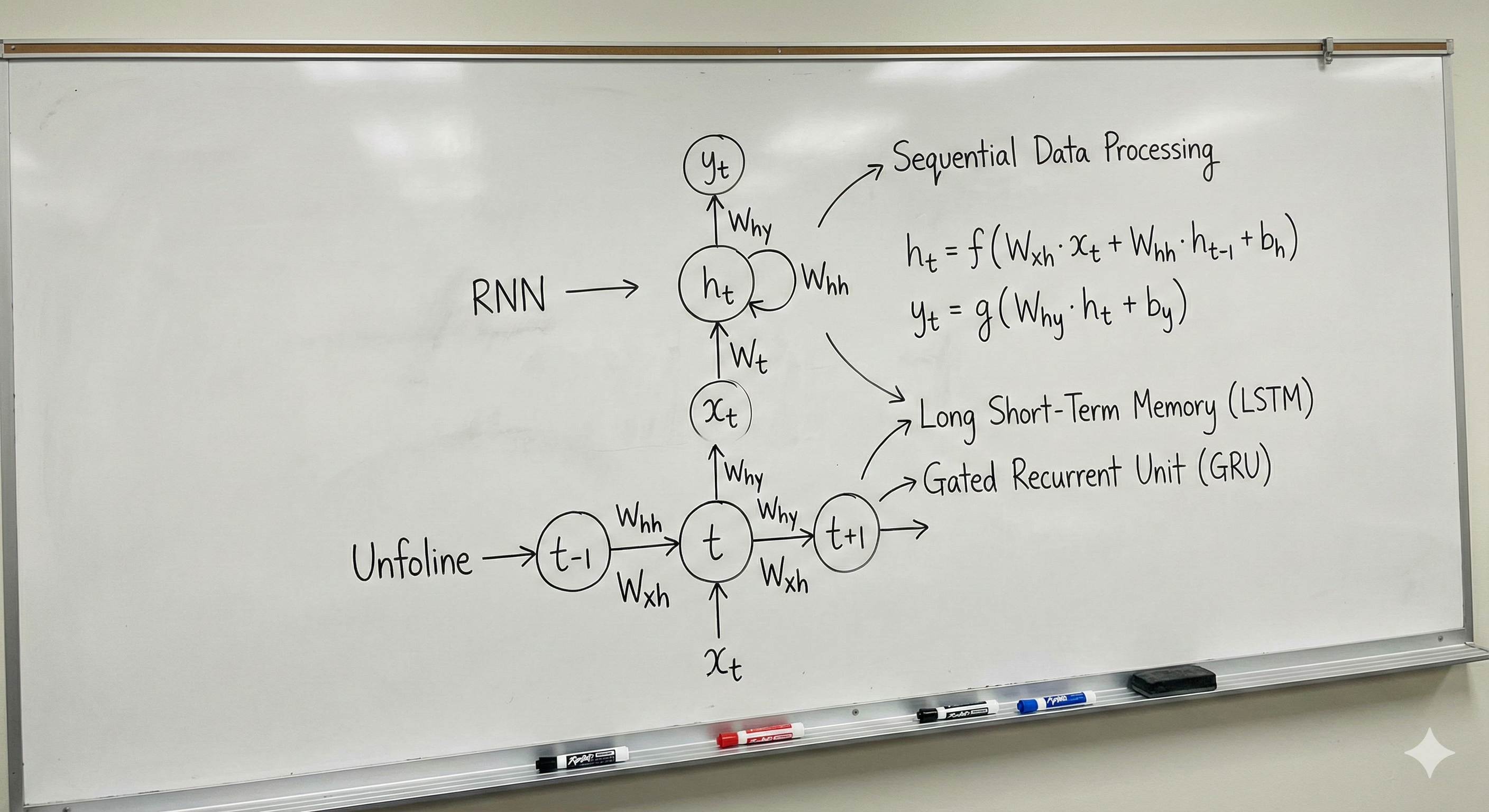

- For language, sequence matters. The meaning of a word often depends on the words that came before it. This is where Recurrent Neural Networks (RNNs) shone.

- Recurrent Neural Networks (RNNs): Unlike standard NNs, RNNs have loops that allow information to persist from one step of the sequence to the next. This

memorymade them ideal for tasks like language translation and speech recognition, where understanding the flow of information is crucial.

However, RNNs struggled with long sequences, often forgetting information from the beginning of a text as they processed towards the end. This is known as the vanishing gradient problem.

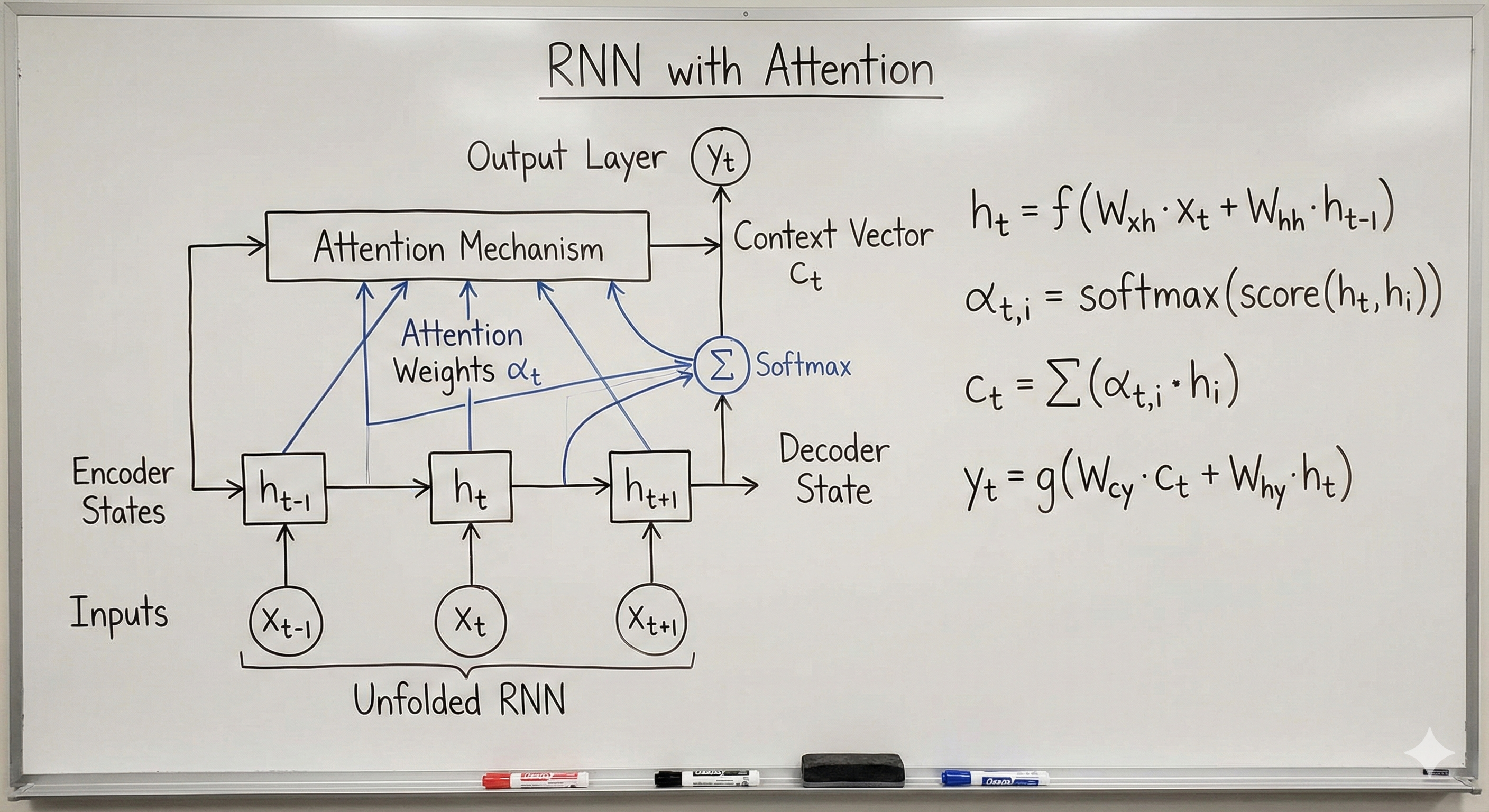

- RNNs with Attention: To overcome this limitation, the

attention mechanismwas introduced. This brilliant innovation allowed RNNs to focus on the most relevant parts of the input sequence when making a prediction, no matter how far apart they were. Imagine reading a book and being able to instantly recall a specific detail from an earlier chapter – that’s what attention enabled for RNNs.

The Transformer Revolution: Parallel Processing Power

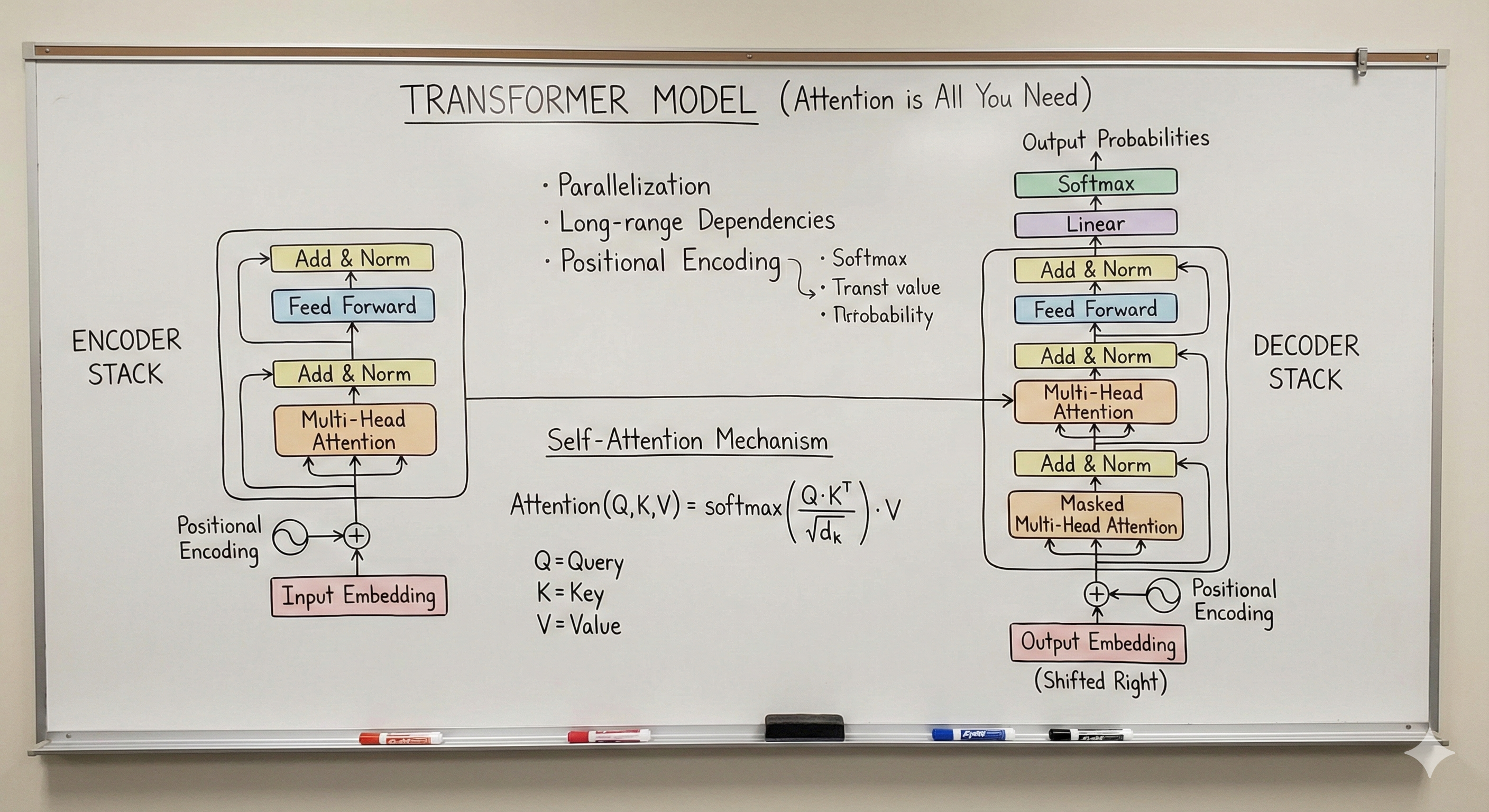

While attention improved RNNs, they were still sequential in nature. The next big leap came with the Transformer architecture.

- Transformers (and BERT): Introduced in 2017, Transformers completely abandoned recurrence and convolutions, relying solely on self-attention mechanisms. This allowed them to process all parts of an input sequence in parallel, dramatically increasing training speed and enabling them to handle much larger datasets.

One of the most influential Transformer-based models wasBERT (Bidirectional Encoder Representations from Transformers). BERT revolutionized how AI understood context by pre-training on vast amounts of text data to predict masked words in a sentence and determine if two sentences were related. This bidirectional understanding allowed it to grasp the full context of a word, considering both what comes before and after.

Generative Models: From Understanding to Creating

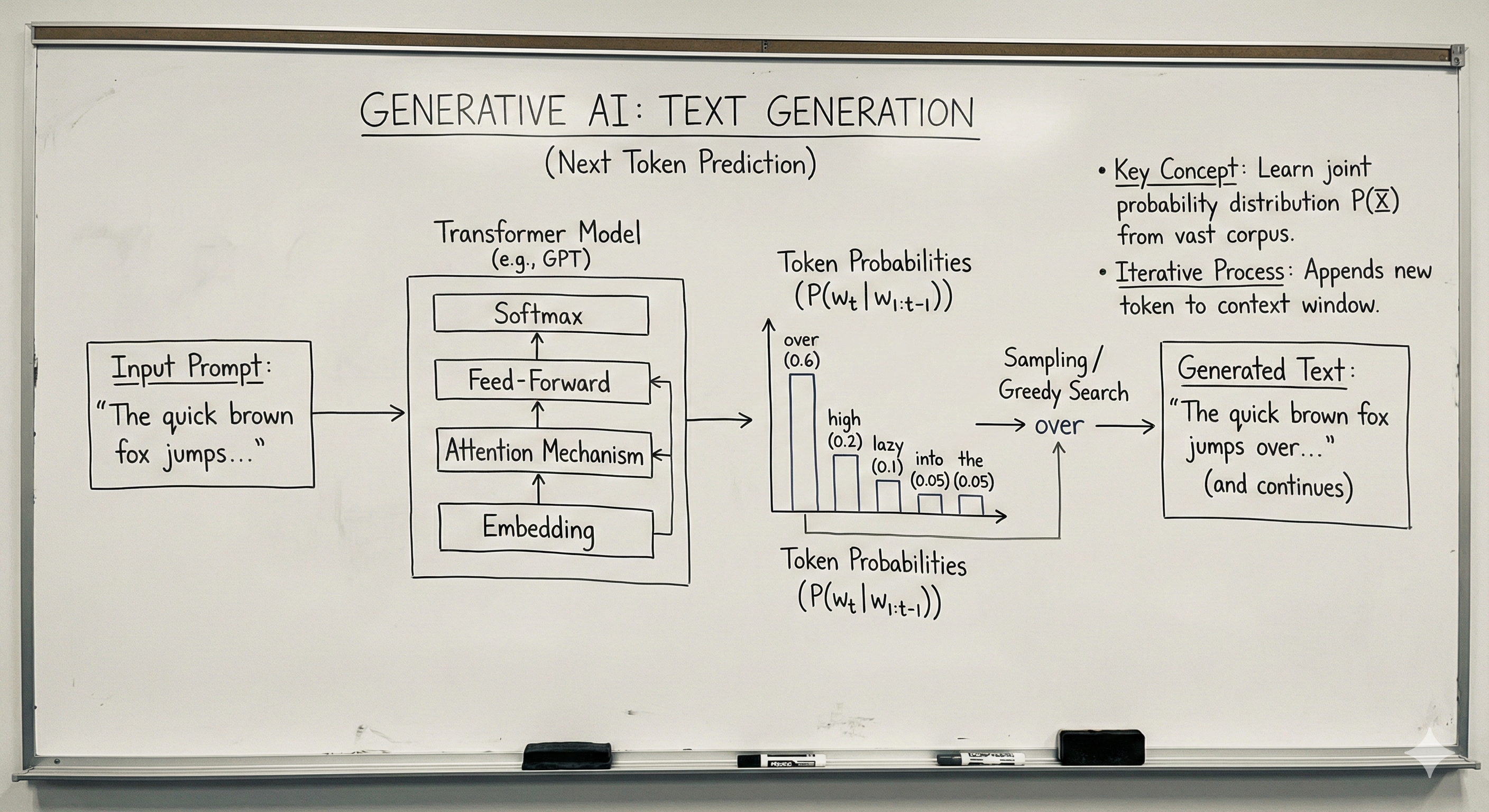

With Transformers, AI wasn’t just understanding language; it was starting to generate it in increasingly sophisticated ways.

- Generative Models (ChatGPT, Gemini): These models, often built upon the Transformer architecture, are trained on colossal amounts of text data to predict the next word in a sequence. This seemingly simple task allows them to generate coherent, contextually relevant, and remarkably human-like text.

- ChatGPT (OpenAI): A household name, ChatGPT captivated the world with its ability to engage in dynamic conversations, write essays, generate code, and much more. Its success largely stems from its vast training data and fine-tuning for conversational tasks.

- Gemini (Google DeepMind): Representing the cutting edge, Gemini is designed as a multimodal model, meaning it can understand and operate across different types of information, including text, code, audio, image, and video. This allows for a much richer and more integrated interaction with AI.

The Present(not future) is Agentic: AI with Purpose

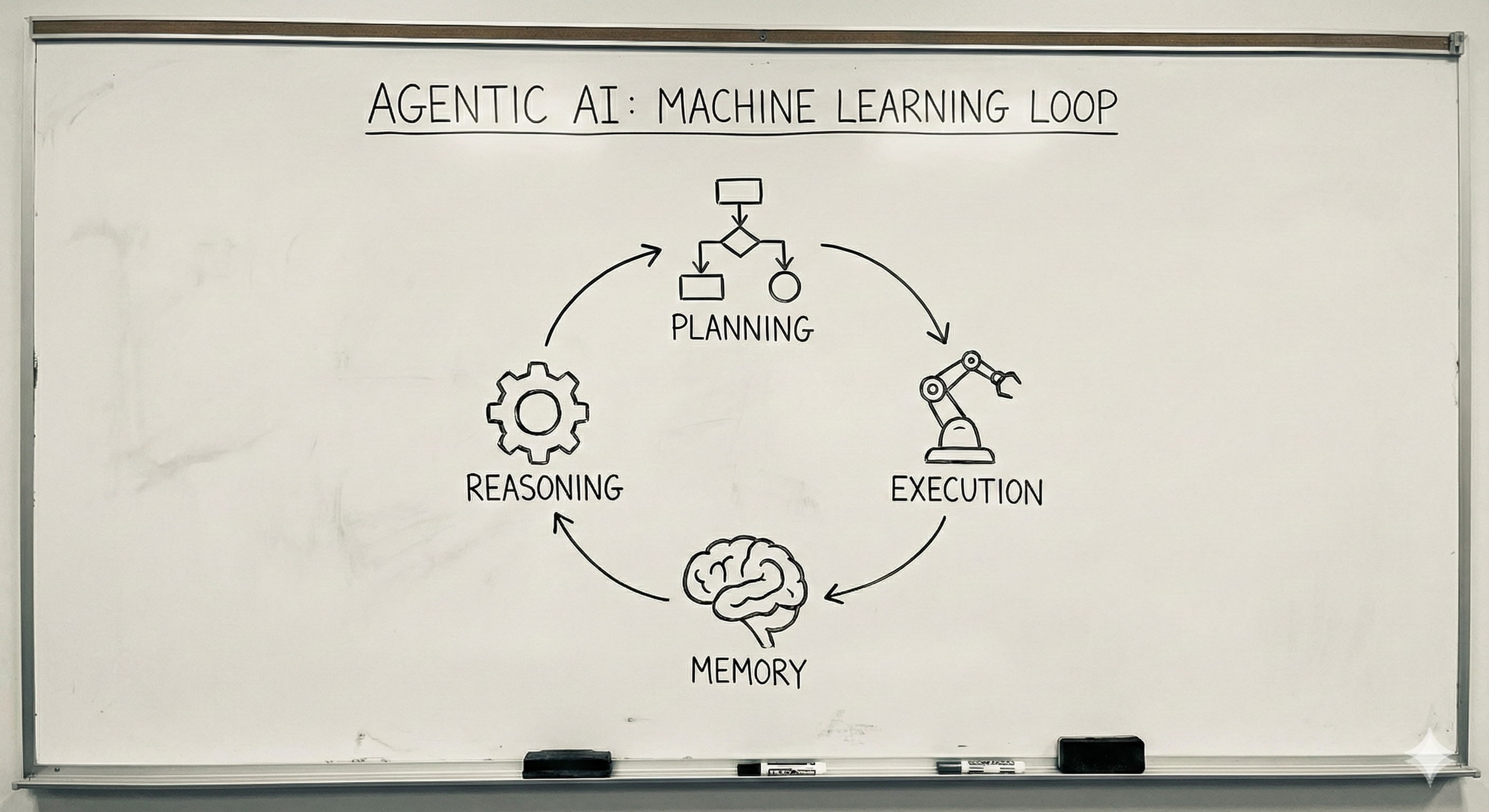

The latest frontier in AI is Agentic AI, moving beyond simply generating text to enabling AI to plan, reason, and execute complex tasks.

- Agents and Agentic AI: An AI agent is a system that can perceive its environment, make decisions, and take actions to achieve specific goals. This involves:

- Planning: Breaking down a complex goal into smaller, manageable steps.

- Reasoning: Using logic and knowledge to determine the best course of action.

- Execution: Carrying out the planned steps, often by interacting with various tools or systems.

- Memory: Remembering past interactions and learning from experiences to improve future performance.

Imagine an AI that doesn’t just suggest a vacation spot, but acts as your personal travel concierge. Once you give it a destination and a budget, the agent autonomously checks flight prices across dozens of sites, monitors hotel availability for the best reviews, and cross-references your calendar to find the perfect dates.

It doesn’t stop there: it can automatically book the tickets (within your pre-set guardrails), build a minute-by-minute itinerary based on local weather forecasts, and even proactively re-book your connecting flight if it detects a delay before you’ve even reached the airport. That is the essence of agentic AI moving from being a chatbox that gives advice to a system that takes action to solve real-world problems.

The Road Ahead

The journey of AI in language processing has been nothing short of astonishing. From counting words to creating art and taking action, we’ve witnessed a rapid evolution. We’re now on the cusp of an era where AI can not only understand and generate language but also interact with the world in meaningful, purposeful ways.

The future of Agentic AI promises to redefine how we work, learn, and live, making complex tasks simpler and unleashing new possibilities for innovation. While challenges remain, the progress so far is a testament to human ingenuity and the boundless potential of artificial intelligence.

References and Credits

- Transformers Paper

- Image credits: https://gemini.google.com/